
The EU AI Act: What Do I Need to Consider?

Learn everything about the EU AI Act – the first comprehensive AI regulation in the EU. Discover the new rules, risk categories, and requirements for businesses. Prepare for implementation and ensure your company's AI compliance!

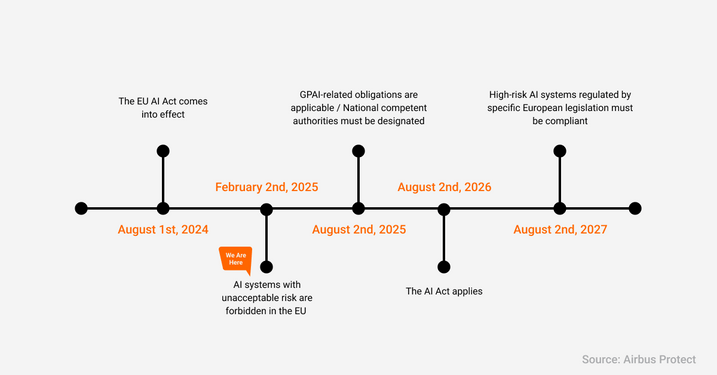

On February 2, 2025, the first provisions of the EU AI Act became applicable. As a central regulatory instrument for the use of Artificial Intelligence within the European Union, this legislation was proposed by the European Commission in 2021 and is now gradually becoming legally binding. Most of the Act will be applicable on August 2, 2026, with the remaining obligations following in 2027.

The EU AI Act is a significant new development for AI systems and AI companies, and also for companies that use AI tools. In this article, we provide an overview of the law, its implications, and how we at OMQ are implementing the EU AI Act.

The EU AI Act: A Definition

The EU AI Act is the first comprehensive legal framework for AI systems within the EU. It was proposed by the European Commission in April 2021 and adopted by the European Parliament in March 2024. The final approval by the EU Council took place in May 2024. This legislation addresses the risks associated with AI and aims to establish Europe as a leader in AI governance.

Overview AI Act.

"The aim of this regulation is to improve the functioning of the internal market and promote the introduction of human-centered and trustworthy artificial intelligence (AI) while ensuring a high level of protection of health, safety, fundamental rights, including democracy, the rule of law, and environmental protection, from the harmful effects of AI systems in the Union and supporting innovation." (Future of Life Institute, 2025)

The regulation sets uniform rules for the development, deployment, and monitoring of AI systems in the EU. It includes bans on certain practices, special requirements for high-risk AI systems, as well as transparency regulations. It also regulates market surveillance, promotes innovation, and especially supports small and medium-sized enterprises.

Categories of the EU AI Act.

Who Is Affected by the EU AI Act?

The regulation applies to:

- Providers who place AI systems on the market or put them into service within the Union, or who place general-purpose AI models on the market, regardless of whether these providers are established or located in the Union or in a third country;

- Operators (called "deployers" in the Act's English text) of AI systems that are established or located in the Union;

- Providers and operators of AI systems based in a third country, if the output generated by the AI system is used within the Union;

- Importers and distributors of AI systems;

- Product manufacturers who place on the market or put into service an AI system together with their product and under their own name or trademark;

- Authorized representatives of providers not established in the Union;

- Affected persons who are located in the Union.

In short, the regulation applies to all players who develop, distribute, or use AI systems in the EU, regardless of whether they are based in the EU or in a third country. This includes providers and operators of AI systems, importers and distributors, as well as manufacturers integrating AI into their products.

The regulation also applies to companies outside the EU if their AI models or their results are used in the EU. Additionally, it protects affected individuals within the EU.

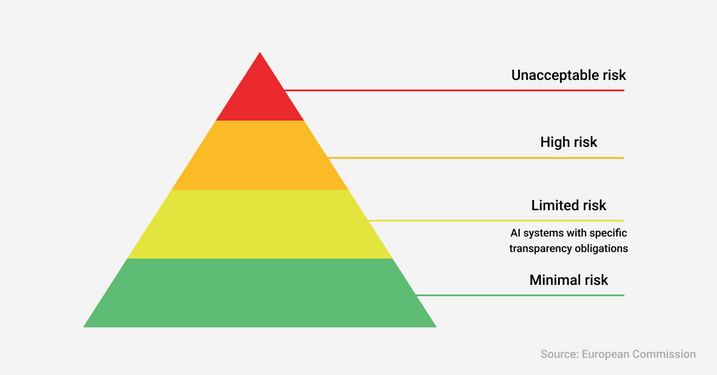

Risk Categories of the EU AI Act

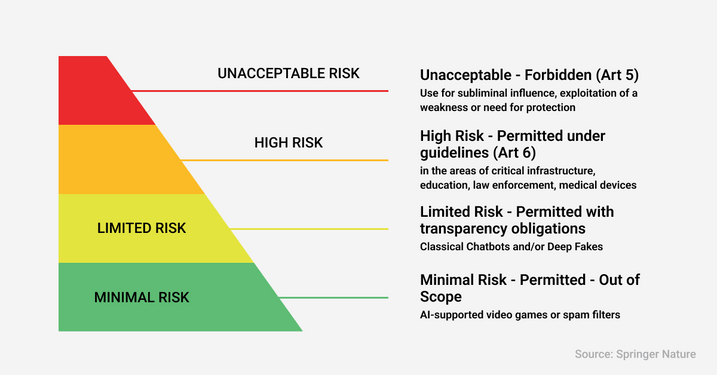

The Act classifies AI applications into four risk levels: unacceptable, high, limited, and minimal risk. An additional category covers general-purpose AI (GPAI) models.

Definitions of the risk categories.

Unacceptable Risk

AI systems that are deemed unacceptably dangerous and are banned, such as:

- Manipulative techniques for behavioral control

- Social credit systems (e.g., social scoring like in China)

- Real-time remote biometric identification, such as facial recognition, in public spaces (with few exceptions)

- Emotion recognition in workplaces or educational institutions

High Risk

AI applications that have significant impacts on health, safety, or fundamental rights and are subject to strict requirements. These include, for example:

- Critical infrastructure (e.g., traffic control, energy supply)

- Educational and personnel evaluation systems (e.g., AI-based exam assessments)

- Personnel management and recruitment processes (automated screening of job applications)

- Creditworthiness assessments and access to essential services

- Law enforcement and justice (e.g., crime prediction)

Limited Risk

AI systems with certain risks that are subject to transparency requirements. Examples include:

- Chatbots must indicate that users are interacting with AI

- AI-generated content must be clearly labeled as such

Minimal or No Risk

Most AI applications that do not pose significant risks to citizens fall into this category. These include:

- AI-assisted video games

- Spam filters

- Recommendation algorithms for streaming services

This classification ensures that AI innovations are promoted while simultaneously protecting safety and fundamental rights.
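The four tiers can also be read as a simple lookup from use case to consequence. The following is a minimal sketch that reuses only examples from the lists above; the names `RiskTier`, `TREATMENT`, `EXAMPLES`, and `treatment_for` are hypothetical, and the mapping is an illustration, not a legal classification.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Consequence of each tier, paraphrased from the article text.
TREATMENT = {
    RiskTier.UNACCEPTABLE: "banned",
    RiskTier.HIGH: "strict requirements",
    RiskTier.LIMITED: "transparency requirements",
    RiskTier.MINIMAL: "no significant risk",
}

# Illustrative examples taken from the lists above (assumed keys, not legal terms).
EXAMPLES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "emotion recognition in workplaces": RiskTier.UNACCEPTABLE,
    "automated screening of job applications": RiskTier.HIGH,
    "creditworthiness assessment": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
    "streaming recommendations": RiskTier.MINIMAL,
}


def treatment_for(use_case: str) -> str:
    """Return the tier and its consequence for a known example, or flag it for review."""
    tier = EXAMPLES.get(use_case.strip().lower())
    if tier is None:
        return "unknown: needs a legal assessment"
    return f"{tier.value} risk: {TREATMENT[tier]}"


print(treatment_for("Chatbot"))
```

Anything not in the table is deliberately reported as unknown, since a real classification depends on the concrete use of the system and requires a legal assessment.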

Timeline of the EU AI Act

As previously mentioned, the EU AI Act has been under discussion since 2021, and a detailed timeline sets out when the individual provisions become applicable. The first rules for AI applications have been in effect since February 2, 2025. What else is to come?

Timeline for the act.

February 2, 2025: Ban of AI systems with unacceptable risks and implementation of AI literacy requirements (Chapters 1 & 2)

August 2, 2025: Implementation of governance rules and obligations for GPAI providers, as well as rules for notifications to authorities and fines (Chapters 3, 5, 7, 12 & Article 78)

August 2, 2026: End of the 24-month transitional period. Obligations for high-risk AI systems come into effect (Article 6(2) & Annex III)

August 2, 2027: Obligations for high-risk AI systems as safety components come into effect (Article 6(1)). The entire EU AI Act becomes applicable.

The EU AI Act entered into force on August 1, 2024. Most of its provisions will apply from August 2, 2026, and the remaining obligations for high-risk AI systems follow on August 2, 2027. Certain provisions apply earlier: the bans on unacceptable AI systems took effect six months after entry into force.
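To check which stages already apply on a given date, the milestones can be kept in a small table. This is a minimal sketch using only the dates and descriptions listed in the timeline above; the names `MILESTONES`, `applicable_on`, and `next_milestone` are hypothetical.

```python
from datetime import date

# Milestones as listed in the timeline above.
MILESTONES = [
    (date(2025, 2, 2), "Ban of AI systems with unacceptable risks; AI literacy requirements"),
    (date(2025, 8, 2), "Governance rules, GPAI provider obligations, notifications, fines"),
    (date(2026, 8, 2), "Obligations for high-risk AI systems (Article 6(2), Annex III)"),
    (date(2027, 8, 2), "Obligations for high-risk AI systems as safety components (Article 6(1))"),
]


def applicable_on(day: date) -> list[str]:
    """Return every milestone whose start date is on or before `day`."""
    return [label for start, label in MILESTONES if start <= day]


def next_milestone(day: date):
    """Return the first (date, label) pair strictly after `day`, or None."""
    upcoming = [m for m in MILESTONES if m[0] > day]
    return min(upcoming) if upcoming else None


for label in applicable_on(date(2025, 9, 1)):
    print("in effect:", label)
print("next:", next_milestone(date(2025, 9, 1)))
```

For September 1, 2025, the loop lists the first two milestones, and the next one is the August 2, 2026 stage.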

Enforcement and Penalties

Non-compliance with the Act can result in sanctions and fines of up to €35 million or 7% of a company’s global annual turnover, whichever is higher. This maximum applies to violations of the banned practices; specific penalties apply to high-risk AI systems as well as general-purpose AI models.
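The upper limit of the top fine bracket is simple arithmetic. The sketch below assumes the "whichever is higher" reading of the €35 million / 7% rule for companies; the function name `max_fine_eur` is hypothetical, and the result is a ceiling, not a prediction of an actual fine.

```python
def max_fine_eur(global_annual_turnover_eur: int) -> float:
    """Upper limit of the top fine bracket: EUR 35 million or 7% of turnover.

    Assumption: the higher of the two values applies.
    """
    fixed_cap_eur = 35_000_000
    turnover_cap_eur = global_annual_turnover_eur * 7 / 100
    return float(max(fixed_cap_eur, turnover_cap_eur))


# A company with EUR 100 million turnover: 7% is EUR 7 million, so the fixed cap applies.
print(max_fine_eur(100_000_000))
# A company with EUR 1 billion turnover: 7% is EUR 70 million, which exceeds the fixed cap.
print(max_fine_eur(1_000_000_000))
```

The two caps meet at a turnover of €500 million; above that, the percentage determines the ceiling.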

OMQ and the EU AI Act

Safety, transparency, compliance – all these essential components are combined in OMQ. Thus, all requirements of the EU AI Act are met. The OMQ Legacy Engine and OMQ LLM Engine are classified as low-risk AI systems and operate in compliance with the law.

"The AI Act creates clarity and trust in the use of AI. With OMQ, our customers rely on a transparent and secure solution that ensures full control over generated content." (Matthias Meisdrock, CEO of OMQ GmbH)

Full Transparency

Every generated text can be traced. Customers can be assured that their queries are based on structured and verified knowledge databases.

Maximum Data Security

Our systems process data exclusively within the EU, in compliance with the GDPR, and without storage in external models.

We adhere to these legal foundations diligently. However, OMQ offers another major advantage: real-time adjustments.

Unlike with many other AI providers, changes can be made to OMQ’s AI tools at any time. Information can be updated directly in the knowledge base and is then available in real time across all channels (chatbot, email, helpdesk).

Tips for OMQ Customers

To ensure you are well prepared for the AI Act, we recommend two measures that are straightforward to implement:

The chatbot and AI-generated responses must be clearly identified as AI-generated. This can easily be implemented by having the chatbot clearly indicate in its initial message that it is an AI chatbot.

You can adjust this in the OMQ software in the “Chatbot” module under the “Standard Texts” tab. Avoid presenting the AI or the chatbot as human support.
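For teams that also assemble chatbot greetings in their own code, the same rule can be enforced programmatically. This is a minimal, generic sketch and not part of the OMQ software or its API; the disclosure wording and the names `AI_DISCLOSURE` and `with_ai_disclosure` are assumptions for illustration.

```python
# Hypothetical disclosure text; adapt the wording to your own standard texts.
AI_DISCLOSURE = "You are chatting with an AI chatbot."


def with_ai_disclosure(greeting: str, disclosure: str = AI_DISCLOSURE) -> str:
    """Prefix the greeting with the disclosure unless it is already present."""
    if disclosure.lower() in greeting.lower():
        return greeting
    return f"{disclosure} {greeting}".strip()


print(with_ai_disclosure("How can I help you today?"))
```

The check makes the function safe to apply more than once: a greeting that already contains the disclosure is returned unchanged.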

Customers will increasingly ask questions about AI applications. Be prepared for questions such as: “Where is my input processed?” or “Does your software use LLMs? Is it AI Act-compliant?”

Answer: OMQ processes data securely within the EU and meets all AI Act requirements.

For more information, we can send you our AI Act Compliance.

Conclusion

The EU AI Act marks a pivotal step in the regulation of artificial intelligence within the European Union. It creates a clear legal framework to foster innovation while minimizing risks. By introducing a risk-based approach, AI systems are regulated according to their potential harm, with banned practices, high transparency requirements, and consumer protections at the forefront.

Companies developing or using AI must prepare for the new requirements early, as violations can lead to significant penalties. With its gradual implementation by 2027, the AI Act will set long-term standards for the safe and ethical use of AI – not only in the EU but also as a global model for future regulations.