
What is Natural Language Processing? Definition and Application Examples

Natural Language Processing (NLP) enables computers to understand and process human language. Learn more about applications and techniques like machine learning and semantic analysis.

Natural Language Processing (NLP) is a revolutionary field of computer science and artificial intelligence that enables interaction between computers and humans through natural language.

Through NLP, machines can understand, interpret, and generate human language, fundamentally changing the way we interact with technology.

What is Natural Language Processing?

NLP, short for Natural Language Processing, is a subfield of artificial intelligence focused on enabling computers to understand and interpret human language.

The origins of NLP date back to the 1950s, when the first rule-based systems were developed. Today, most approaches rely on machine learning and deep learning techniques.

Simplified illustration of NLP.

How does NLP work?

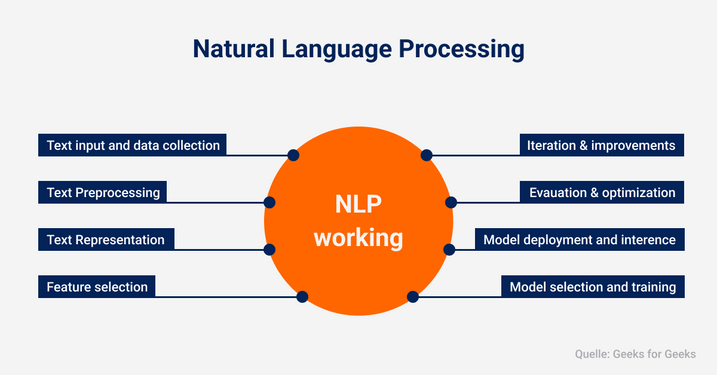

Natural Language Processing (NLP) combines computer science, artificial intelligence, and linguistics to represent human language in a form that machines can process. Here are the key components and techniques used in NLP:

- Computational Linguistics: This area focuses on rule-based modeling of human language, complemented by statistical and data science techniques that model language in a form machines can process.

- Machine Learning (ML): ML plays a crucial role in NLP. ML algorithms enable computers to learn patterns from data and make predictions or decisions without being explicitly programmed for each case.

- Deep Learning: A subfield of machine learning, deep learning uses artificial neural networks to model complex patterns in large amounts of data. This technique is essential for advanced NLP tasks like machine translation or speech recognition.

- Preprocessing: Before text data can be analyzed, it must be preprocessed. This includes tokenizing the text (breaking it down into units such as words, subwords, or punctuation marks), removing stop words (common words like “and,” “the,” and “is,” which often carry little information for the analysis), stemming (heuristically stripping word endings, which can produce stems that are not real words), and lemmatization (reducing words to their dictionary base form, or lemma, for example “mice” to “mouse”).

- Syntax Analysis: Here, the grammatical structure of a sentence is examined. The goal of syntax analysis (also called parsing) is to identify the structure of a sentence by breaking it into components such as subject, predicate, and objects. This helps verify grammatical correctness and lays the groundwork for semantic analysis. Common approaches include constituency parsing based on context-free grammars and dependency parsing, which models the grammatical relationships between individual words in a sentence.

- Semantic Analysis: Once the syntactic structure is identified, semantic analysis is crucial for understanding the meaning of a sentence. It involves capturing the contextual meaning of words and phrases by resolving ambiguities and analyzing the relationship between concepts. Techniques like Named Entity Recognition (NER) identify specific entities such as names, places, or organizations in a text, while sentiment analysis assesses the emotional tone of the text.

- Pragmatic Analysis: This phase of language processing examines the meaning of utterances in context, capturing intended meanings, irony, or references. It looks at language in the context of the situation in which it is used, analyzing aspects like conversational maxims or implicit statements.

- Word Vectors and Embeddings: To convert words into a format understandable by machines, they are often represented as vectors. Techniques like Word2Vec or GloVe convert words into numerical vectors that capture semantic similarities between words. These embeddings are particularly useful for recognizing semantic relationships and are used in many NLP applications such as machine translation or text classification.

- Transfer Learning and Pretrained Models: Modern NLP systems increasingly build on pretrained models like BERT, GPT, or T5, which are trained on large text corpora and capture the context-dependent meanings of words. These models are then fine-tuned for specific tasks, which typically improves accuracy and reduces the amount of labeled, task-specific data needed compared with training a model from scratch.

Components that make NLP function.
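To make the machine learning idea above concrete, here is a minimal Python sketch of a multinomial naive Bayes text classifier, one of the classic techniques for learning from labeled text. It is not how modern NLP systems are built: the tiny training set, the labels, and the class name `NaiveBayesClassifier` are hypothetical and chosen only for illustration. The point is that no classification rules are written by hand; the word statistics are learned from the examples.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and extract word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesClassifier:
    """Hypothetical minimal multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesClassifier":
        self.class_counts = Counter(labels)      # number of documents per label
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text: str) -> str:
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, doc_count in self.class_counts.items():
            counts = self.word_counts[label]
            total_words = sum(counts.values())
            # Log prior: how common this label was in the training data.
            score = math.log(doc_count / total_docs)
            for word in tokenize(text):
                if word not in self.vocab:
                    continue  # words never seen during training are ignored
                # Smoothed log likelihood, so a word unseen for this label never yields log(0).
                score += math.log((counts[word] + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical toy training data.
train_texts = [
    "great product, works well",
    "love it, excellent quality",
    "terrible, broke after a day",
    "awful quality, do not buy",
]
train_labels = ["positive", "positive", "negative", "negative"]

clf = NaiveBayesClassifier().fit(train_texts, train_labels)
print(clf.predict("excellent product"))  # positive
print(clf.predict("awful, it broke"))    # negative
```

With only four training sentences the model is of course unreliable; in practice, libraries such as scikit-learn provide tested implementations (for example `MultinomialNB`) that are trained on far larger datasets.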
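The preprocessing steps listed above can be sketched in a few lines of plain Python. This is a deliberately simplified illustration, not a production pipeline: the stop-word list, the suffix rules in the hypothetical `naive_stem` function, and the lemma dictionary `LEMMAS` are stand-ins for the much larger resources that libraries such as NLTK or spaCy provide. It does show the key difference between the last two steps: stemming only chops off endings, while lemmatization maps a word to its dictionary form.

```python
import re

# Hypothetical, tiny stop-word list; real libraries ship far longer lists.
STOP_WORDS = {"a", "an", "and", "the", "is", "are", "how", "of", "to", "through"}

# Hypothetical lemma dictionary; real lemmatizers use full lexicons and part-of-speech information.
LEMMAS = {"studies": "study", "mice": "mouse", "ran": "run", "mazes": "maze"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens, dropping punctuation."""
    return re.findall(r"[a-z']+", text.lower())


def remove_stop_words(tokens: list[str]) -> list[str]:
    """Keep only tokens that are not in the stop-word list."""
    return [t for t in tokens if t not in STOP_WORDS]


def naive_stem(token: str) -> str:
    """Strip the first matching suffix from a short, hypothetical list, keeping at least 3 letters."""
    for suffix in ("ing", "ies", "ed", "es", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def lemmatize(token: str) -> str:
    """Return the dictionary base form if it is known, else the token unchanged."""
    return LEMMAS.get(token, token)


tokens = remove_stop_words(tokenize("The student studies how the mice ran through the mazes."))
print(tokens)                           # ['student', 'studies', 'mice', 'ran', 'mazes']
print([naive_stem(t) for t in tokens])  # ['student', 'stud', 'mice', 'ran', 'maz']
print([lemmatize(t) for t in tokens])   # ['student', 'study', 'mouse', 'run', 'maze']
```

The stems “stud” and “maz” are not real words, which is acceptable for stemming because it only needs to group related word forms, whereas the lemmatizer returns proper dictionary forms such as “mouse” that no suffix rule could produce.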
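For the syntax analysis step described above, the following Python sketch shows how a context-free grammar can be used to check whether a sentence is grammatical. It implements the CYK (Cocke-Younger-Kasami) algorithm, a standard dynamic-programming recognizer for grammars in Chomsky normal form. The grammar and lexicon are hypothetical toy examples covering only a handful of words, and the function only answers yes or no; a full parser would also keep back-pointers to build the parse tree. Dependency parsing is not shown.

```python
from itertools import product

# Hypothetical toy grammar in Chomsky normal form:
# binary rules (B, C) -> {A} mean A -> B C; lexical rules map a word to its categories.
BINARY_RULES = {
    ("NP", "VP"): {"S"},
    ("Det", "N"): {"NP"},
    ("V", "NP"): {"VP"},
}
LEXICON = {
    "the": {"Det"},
    "dog": {"N"},
    "cat": {"N"},
    "chased": {"V"},
}


def cyk_recognize(words: list[str], start: str = "S") -> bool:
    """Return True if the grammar can derive the whole word sequence from `start`."""
    n = len(words)
    if n == 0:
        return False
    # table[i][j] holds the nonterminals that can derive words[i : j + 1].
    table = [[set() for _ in range(n)] for _ in range(n)]
    for i, word in enumerate(words):
        table[i][i] = set(LEXICON.get(word, set()))
    for length in range(2, n + 1):        # span length
        for i in range(n - length + 1):   # span start
            j = i + length - 1            # span end (inclusive)
            for k in range(i, j):         # split point between the two sub-spans
                for left, right in product(table[i][k], table[k + 1][j]):
                    table[i][j] |= BINARY_RULES.get((left, right), set())
    return start in table[0][n - 1]


print(cyk_recognize("the dog chased the cat".split()))  # True
print(cyk_recognize("chased the dog the".split()))      # False
```

The table-filling approach runs in cubic time in the sentence length, which is why it remains a standard textbook example for context-free parsing.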
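The idea behind word vectors and embeddings can be illustrated with cosine similarity, a common way to measure how close two embedding vectors are. In this Python sketch the three-dimensional vectors are hypothetical, hand-picked values; real Word2Vec or GloVe embeddings are learned from large corpora and have many more dimensions (pretrained GloVe vectors, for example, come in sizes from 50 to 300).

```python
import math

# Hypothetical 3-dimensional "embeddings", hand-picked for illustration only.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.85, 0.75, 0.2],
    "car": [0.1, 0.2, 0.95],
}


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between u and v: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(word: str) -> str:
    """Return the other vocabulary word whose vector has the highest cosine similarity to `word`."""
    target = EMBEDDINGS[word]
    others = (w for w in EMBEDDINGS if w != word)
    return max(others, key=lambda w: cosine_similarity(target, EMBEDDINGS[w]))


print(round(cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["dog"]), 3))  # 0.996
print(round(cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["car"]), 3))  # 0.293
print(most_similar("cat"))  # dog
```

Cosine similarity ignores vector length and compares only direction, which is why it is a popular choice for comparing embeddings.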

How is NLP used?

NLP covers a range of core tasks that serve as building blocks for applications across many industries. Here are some key ones:

- Part-of-Speech Tagging: Assigning each word in a sentence its grammatical category, such as noun, verb, or adjective.

- Named Entity Recognition (NER): Identifying and categorizing proper names in text.

- Word Sense Disambiguation: Determining the correct meaning of a word based on its context.

- Speech Recognition: Converting spoken language into text.

- Machine Translation: Translating text or speech from one language to another.

- Sentiment Analysis: Assessing the emotional tone of a text.
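Word sense disambiguation from the list above can be illustrated with a simplified version of the Lesk algorithm, a classic dictionary-based approach: choose the sense whose dictionary definition (gloss) shares the most words with the surrounding sentence. In this Python sketch the two senses of “bank”, their glosses, their IDs, and the stop-word list are hypothetical; real implementations draw glosses from a lexical database such as WordNet, and current systems typically rely on learned models instead.

```python
import re

# Hypothetical mini sense inventory: each sense of "bank" has a short gloss.
SENSES = {
    "bank": {
        "bank.financial": "an institution that accepts deposits of money and makes loans",
        "bank.river": "the sloping land along the edge of a river or lake",
    }
}
# Hypothetical stop words, ignored when counting overlapping words.
STOP_WORDS = {"a", "an", "the", "of", "and", "or", "that", "to", "on", "at", "we", "she", "her", "it"}


def content_words(text: str) -> set[str]:
    """Lowercased word set without stop words."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS}


def simplified_lesk(word: str, sentence: str) -> str:
    """Pick the sense of `word` whose gloss overlaps most with the sentence's other words."""
    context = content_words(sentence) - {word}
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:  # on a tie, the first-listed sense is kept
            best_sense, best_overlap = sense, overlap
    return best_sense


print(simplified_lesk("bank", "She deposited money at the bank to repay her loans."))  # bank.financial
print(simplified_lesk("bank", "We sat on the bank of the river and watched the water."))  # bank.river
```

Note that exact word matching misses related forms such as “deposited” and “deposits”; applying the preprocessing steps described earlier (stemming or lemmatization) is a common way to improve the overlap count.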

Applications of Natural Language Processing

Natural Language Processing (NLP) is used in a wide range of applications across various sectors, as it allows machines to understand and respond to human language. One of the most well-known applications is machine translation, where tools like Google Translate enable the real-time translation of text or speech from one language to another.

These services use complex models that do not just translate individual words but take the full context of a sentence into account to produce meaningful translations.

Another significant application of NLP is virtual assistants like Siri, Alexa, or Google Assistant. These systems use NLP to process spoken requests, understand the meaning behind words, and generate appropriate responses or carry out tasks such as setting reminders, sending messages, or controlling smart home devices.

In customer service, chatbots and automated response systems play an increasingly important role. These NLP-based systems can analyze customer inquiries and often respond in real time, helping businesses streamline and enhance their support processes. Advanced systems can even detect emotional nuances in requests and respond accordingly.

Another growing application is sentiment analysis, which is often applied to social media posts, customer reviews, or news articles. NLP models analyze the underlying sentiment of a text to determine whether a statement is positive, negative, or neutral. This is especially valuable for companies that want to monitor brand perception or evaluate customer feedback.
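A very simple way to assign the positive, negative, or neutral labels described above is a lexicon-based scorer. The Python sketch below sums hypothetical word scores and flips the sign of a word that directly follows a negation such as “not”. This rule-based approach is only a baseline for illustration: the word list and scores are invented, and the NLP models used in practice are typically trained on labeled data and handle context far better.

```python
import re

# Hypothetical sentiment lexicon: word -> polarity score.
LEXICON = {
    "good": 1, "great": 2, "love": 2, "excellent": 2,
    "bad": -1, "poor": -1, "terrible": -2, "hate": -2,
}
# Hypothetical negation words that invert the polarity of the word immediately after them.
NEGATIONS = {"not", "no", "never", "isn't", "wasn't", "don't"}


def classify_sentiment(text: str) -> str:
    """Label a text as positive, negative, or neutral from its summed lexicon scores."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = LEXICON.get(token, 0)
        if i > 0 and tokens[i - 1] in NEGATIONS:
            polarity = -polarity  # e.g. "not good" counts as negative
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(classify_sentiment("I love this phone, the camera is excellent"))           # positive
print(classify_sentiment("The battery is not good and the screen is terrible"))  # negative
print(classify_sentiment("The package arrived on Tuesday"))                      # neutral
```

Even this small example shows why context matters: without the negation rule, “not good” would have been counted as positive.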

Automatic text summarization is another practical use of NLP. This technology analyzes long documents or articles and condenses them into concise summaries. It is especially useful in the news industry or in scientific research, where large amounts of text need to be processed and understood efficiently.
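One simple way to produce such summaries is extractive summarization: score each sentence and keep the highest-scoring ones verbatim. The Python sketch below uses average word frequency as the score, a classic heuristic. The stop-word list and example text are hypothetical, sentence splitting is naive, and many modern systems instead generate new wording (abstractive summarization) with neural models.

```python
import re
from collections import Counter

# Hypothetical, tiny stop-word list.
STOP_WORDS = {"a", "an", "and", "the", "is", "was", "of", "on", "to", "in"}


def content_words(text: str) -> list[str]:
    """Lowercased words of the text, without stop words."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]


def summarize(text: str, num_sentences: int = 1) -> str:
    """Return the num_sentences highest-scoring sentences, in their original order."""
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        words = content_words(sentence)
        # Average frequency, so long sentences are not favored just for their length.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])  # restore the original sentence order
    return " ".join(sentences[i] for i in keep)


text = (
    "Text summarization condenses long text. "
    "Summarization tools select key text. "
    "The office closed early on Friday."
)
print(summarize(text))     # Text summarization condenses long text.
print(summarize(text, 2))  # Text summarization condenses long text. Summarization tools select key text.
```

The off-topic last sentence scores lowest because its words appear only once in the text, which is the intuition behind frequency-based extraction.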

In medicine, NLP is used to analyze unstructured data from patient records to better understand diagnoses and treatment courses. This technology also helps in the analysis of scientific literature to gain new insights more quickly and make better decisions in healthcare.

Conclusion

In summary, NLP is a key technology used in many everyday applications to facilitate interaction between humans and machines by allowing machines to process and meaningfully interpret natural language.

From translations and virtual assistants to automated customer services and medical research, NLP is transforming how we interact with technology and information.